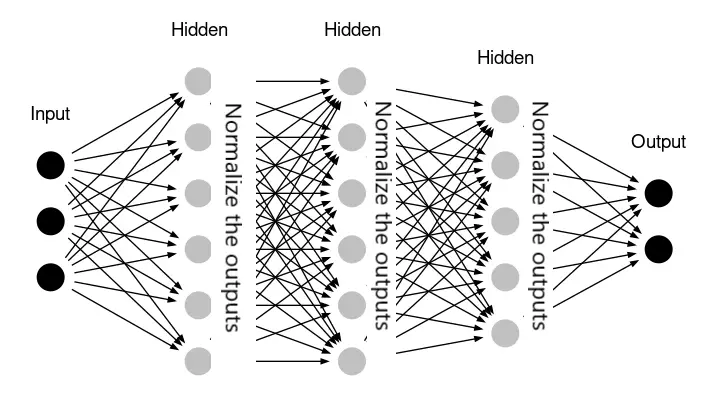

tf.keras and TensorFlow: Batch Normalization to train deep neural networks faster | by Chris Rawles | Towards Data Science

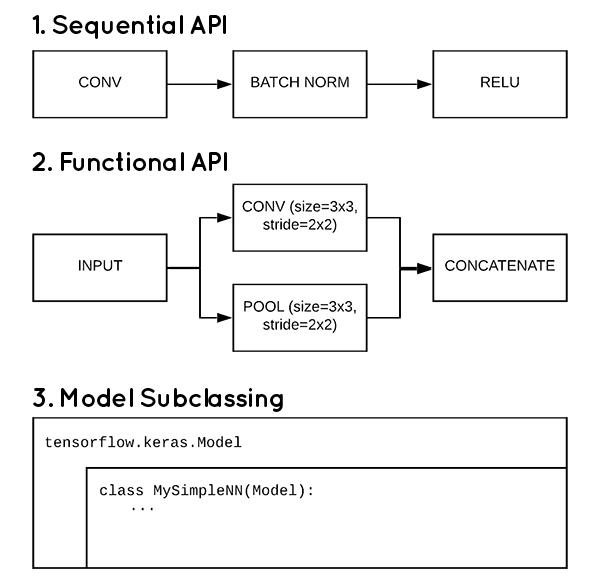

3 ways to create a Keras model with TensorFlow 2.0 (Sequential, Functional, and Model Subclassing) - PyImageSearch

trainable flag does not work for batch normalization layer · Issue #4762 · keras-team/keras · GitHub

François Chollet on Twitter: "10) Some layers, in particular the `BatchNormalization` layer and the `Dropout` layer, have different behaviors during training and inference. For such layers, it is standard practice to expose
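
The training/inference split described in the quote above can be illustrated with a small, library-free sketch. This is not the Keras implementation: `ToyBatchNorm`, its `momentum` and `epsilon` defaults, and the boolean flag named `training` are all assumptions chosen for illustration. In training mode the layer normalizes with the statistics of the current batch and updates its running averages; in inference mode it normalizes with the stored running averages instead.

```python
import math


class ToyBatchNorm:
    """Hypothetical, simplified batch normalization over a list of scalars.

    Illustrative only: no learnable scale/offset, and not the Keras layer.
    """

    def __init__(self, momentum=0.9, epsilon=1e-3):
        self.momentum = momentum
        self.epsilon = epsilon
        # Running statistics used at inference time.
        self.moving_mean = 0.0
        self.moving_var = 1.0

    def __call__(self, batch, training=False):
        if training:
            # Training: normalize with the statistics of this batch...
            mean = sum(batch) / len(batch)
            var = sum((x - mean) ** 2 for x in batch) / len(batch)
            # ...and fold them into the running averages.
            m = self.momentum
            self.moving_mean = m * self.moving_mean + (1 - m) * mean
            self.moving_var = m * self.moving_var + (1 - m) * var
        else:
            # Inference: reuse the stored running statistics unchanged.
            mean, var = self.moving_mean, self.moving_var
        return [(x - mean) / math.sqrt(var + self.epsilon) for x in batch]


layer = ToyBatchNorm()
batch = [1.0, 2.0, 3.0, 4.0]

train_out = layer(batch, training=True)   # uses batch mean and variance
infer_out = layer(batch, training=False)  # uses running mean and variance
```

The same input produces different outputs in the two modes, which is why the mode has to be passed explicitly rather than inferred.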

![[Solved] Python Loss of CNN in Keras becomes nan at some point of training - Code Redirect](https://i.stack.imgur.com/625eB.png)